Last week, I had the honor of representing the Jewish people at the AI Ethics for Peace Conference in Hiroshima, Japan, a three-day conversation among global faith, political and industry leaders. The conference was held to promote the necessity of ethical guidelines for the future of artificial intelligence. It was quite an experience.

During the conference, I found myself sitting down for lunch with a Japanese Shinto priest, a Zen Buddhist monk and a leader of the Muslim community from Singapore. Our conversation could not have been more interesting. The developers who devised AI can rightfully boast of many accomplishments, and they can now count among them an unintended one: bringing together people of diverse backgrounds who are deeply concerned about the future their creation will bring.

AI promises great potential benefits, including global access to education and healthcare, medical breakthroughs, and greater predictability that will lead to efficiencies and a better quality of life, at a level unimaginable just a few years ago. But it also poses threats to the future of humanity, including deepfakes, structural biases in algorithms, a breakdown of human connectivity, and the deterioration of personal privacy.

Will AI lead us to an era of human flourishing, or will it create profound despair? Will it be used to augment the best of humanity, or will it encourage our worst tendencies? Armed with this new technology, are we marching forward into an age of ever-deeper and more productive global connection, or will it drive our already polarized world even farther apart?

The rapid development of this transformative new technology has put us all on a course toward an unknown future, where tremendous benefits await but the real risks have not fully been accounted for.

Brad Smith, president of Microsoft, brought the lessons of faith to the concerns of industry by noting at the conference that eight out of the 10 Commandments are about what can go wrong. The lesson, he said, is that the best way to solve a problem is to first worry about it — as we were gathered to do, preemptively, about the risks of AI.

I was particularly moved by the setting of our conversation. Taro Kono, Japan’s minister of digital transformation, pointed out that the host city, a now-vibrant metropolis, is a living example of both the mass destruction that can be wrought by technology and the indestructible human capacity to come together and build anew.

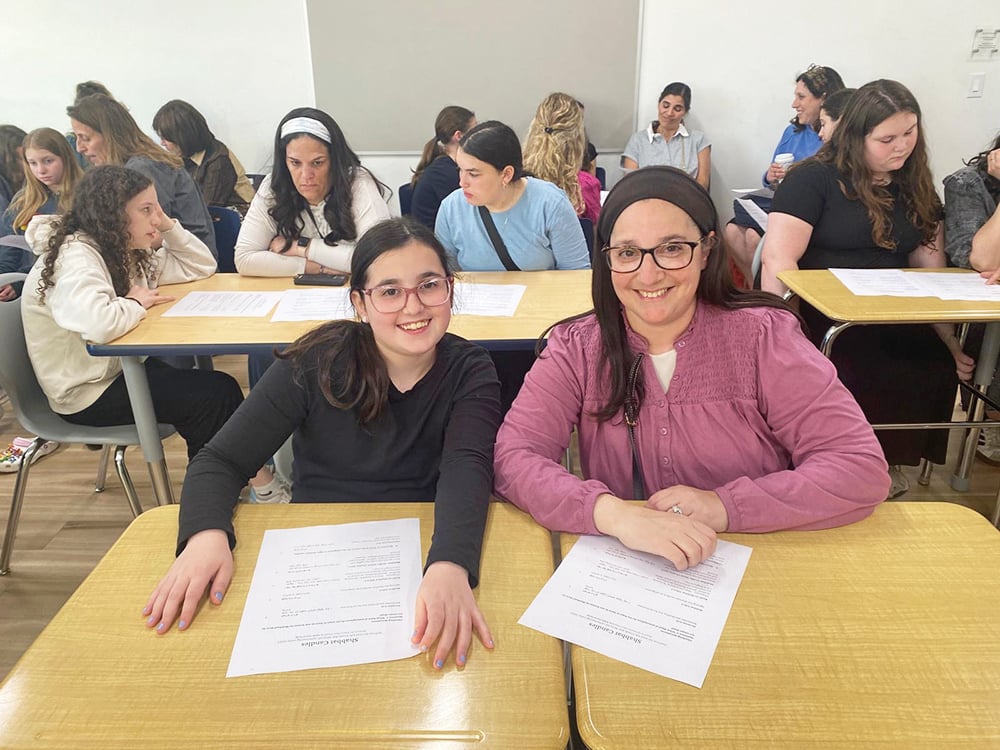

The climax of our three days came when the assembled leaders signed onto the central document, known as the Rome Call, which outlines the core principles necessary for ethical AI: transparency, inclusion, accountability, impartiality, reliability, privacy and security.

Standing together with our Jewish delegation, which included distinguished rabbinic and scientific thought leaders from YU as well as representatives of the Chief Rabbinate of Israel, I saw in the gathering an opportunity to sanctify Hashem’s name in the global sphere. For me, it also marked the beginning of hope for the future, because it stood as an implicit response to an unanswered question asked at the dawn of history. In the Torah, Kayin responds to God’s inquiry about the whereabouts of Hevel by asking, “Am I my brother’s keeper?” While hard, detailed work remains to embed these consistent ethical principles into AI’s development, the leaders who gathered in Hiroshima, collectively representing the majority of the peoples of the world, answered this biblical question by signing the Rome Call and committing to work together: Yes, we are our brothers’ keepers, and that belief is the first step needed to bring about a better tomorrow for all.

In 1964, our teacher Rabbi Joseph B. Soloveitchik published an essay directing conversations between faiths to take place “in the public world of humanitarian and cultural endeavors … on such topics as war and peace, poverty, freedom … moral values … secularism … technology … [and] civil rights.” It was a call not for theological debates, which would lead to dilution and misrepresentation, but for productive discussions in areas of universal concern, for which a common language and purpose must be found. Today, such a dialogue is necessary but insufficient. The global implications of AI are so great that this moment requires a common language not only among faiths but between faith and our entire society.

As the flagship Jewish university, home to world-class talmidei chachamim and top-tier scientists, YU is naturally the address to represent the Jewish people in conversations like these, which highlight the unique way our deep roots in the mesorah nourish and inform our forward focus in areas such as AI and the sciences. My experience has shown me time and again that people are very interested in what the Jewish tradition has to say about the compelling issues of the day. Amid all the talk of rising antisemitism, against which we must remain vigilant and which we must consistently combat, we often do not talk enough about philosemitism and the ways we can influence the society around us.

Our students are our future because they are uniquely prepared – through their character and education – to become the leaders of tomorrow and participate in these kinds of global conversations to represent the Jewish people and glorify Hashem’s name in the world.

Rabbi Dr. Ari Berman is the president of Yeshiva University.