Empathy is at the heart of human social life. It allows us to respond appropriately to other people’s emotions and mental states. A perceived lack of empathy is also one of the features that define autism, so understanding how empathy works is key to devising effective therapies.

While empathic behavior takes many forms, at least two main sets of processes are involved in empathizing. The first is a bottom-up, automatic response to others’ emotions. The classic example is breaking into giggles on seeing another person giggle, without really knowing why. The second is a top-down response, in which we have to work out what another person must be feeling, a bit like solving a puzzle.

My research focuses on the bottom-up, automatic component of empathy, which is sometimes called “emotional contagion”. Emotional contagion happens spontaneously and has important consequences for social behavior. It helps us understand another person’s emotional expressions better by “embodying” their emotion.

It also helps build social bonds: we bond more with those who smile and cry with us. But what factors determine whom we spontaneously imitate? And what makes some people spontaneously imitate more than others? These questions are particularly relevant to understanding some of the behavioral features of autism, which has been associated with reduced spontaneous imitation.

Empathy and autism

One factor that has been suggested to play a central role in how much we spontaneously mimic another person is how rewarding that person is to us. Anecdotally, people seem to spontaneously imitate their close friends more than strangers. In a set of experiments, we tested this suggestion by manipulating the value participants associated with different faces, using a classic conditioning task.

Some faces were paired with rewarding outcomes (for example, these faces would appear on most of the occasions when a participant won in a card game), while others were paired with unrewarding outcomes (these faces would appear on most of the occasions when the participant lost). After the conditioning task, participants were shown happy expressions made by the high-reward and the low-reward faces. Using facial electromyography (a technique that records tiny facial muscle movements that often cannot be detected by the naked eye), we found that individuals showed greater spontaneous imitation of high-reward faces than of low-reward faces.

Crucially, this relationship between reward and spontaneous imitation varied with the level of autistic traits. Autistic traits are the characteristics of autism as they occur, to varying degrees, in the general population. They are distributed across the population, with individuals who have a clinical diagnosis of autism at one end of this spectrum. In our study, people with high autistic traits showed a similar extent of spontaneous imitation for both types of face, while those with low autistic traits showed significantly greater imitation of the high-reward faces.

In another group of volunteers, we ran the same experiment inside an MRI scanner. We found that autistic traits predicted how strongly the brain areas involved in imitation and those involved in reward were connected to each other while people were looking at the high-reward and the low-reward faces.

The emerging picture from this set of studies suggests that the reduced spontaneous imitation seen in autism may not reflect a problem with imitation as such, but rather a difference in how the brain regions involved in imitation are connected to those involved in processing reward. This has important implications for the design of autism therapies, many of which use a reward-learning model to encourage socially appropriate behavior.

The future of brain imaging

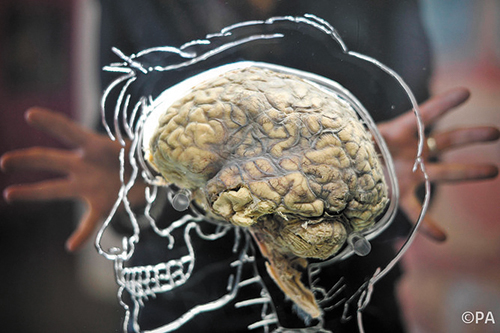

New technologies are constantly expanding the scope of experiments and the inferences we can draw from them. Multiple international initiatives, such as the Human Brain Project, are now imaging the human brain at a higher resolution than ever before.

A high-resolution map of the human brain will give more detailed insight into the nature of these neural connections. This, in turn, could provide targets for future interventions. New technologies will also change the landscape of this research on the computational side, by allowing us to combine insights from different techniques.

At present, there is no standard model for combining data from the different techniques we routinely use in our research (for example, facial electromyography, functional MRI and eye-gaze tracking). Building computational models that combine the results from these techniques will help generate insights far beyond what is possible with any single technique alone.

Decoding the brain, a special report produced in collaboration with the Dana Centre, looks at how technology and person-to-person analysis will shape the future of brain research.

The Conversation is funded by the following universities: Aberdeen, Birmingham, Bradford, Bristol, Cardiff, City, Durham, Glasgow Caledonian, Goldsmiths, Lancaster, Leeds, Liverpool, Nottingham, The Open University, Queen’s University Belfast, Salford, Sheffield, Surrey, UCL and Warwick. It also receives funding from: Hefce, Hefcw, SAGE, SFC, RCUK, The Nuffield Foundation, The Wellcome Trust, Esmée Fairbairn Foundation and The Alliance for Useful Evidence.

By Bhismadev Chakrabarti (He receives funding from Medical Research Council UK)